Large-scale Biodiversity Monitoring Based on Deep Learning

The goal of this application area is to transition from traditional biodiversity monitoring to
automated monitoring systems including field cameras, canopy cameras, mobile tools, satellites,
and drones. An example is Pl@ntNet [Pl@ntNet], a citizen science project available as an app
that helps users identify plants from their pictures. It relies on training and extracting
CNN-based image representations, which are subsequently used for species prediction. Open research
directions in this project concern the possibility of (partially) shifting learning and inference
from today’s implementation, where both are centralized, towards a hybrid, distributed
(HPC/cloud/edge) implementation. This Use Case motivates the need for understanding end-to-end
performance (project objective 2) and better memory management for both training and
inference (project objective 3). Concretely, in this project we will use the Pl@ntNet application
available at Inria to extract experimental scenarios and to validate the outcome of WP2 through
experiments using Pl@ntNet code deployed on hybrid HPC/cloud/edge infrastructures emulated
on the Grid’5000 testbed.

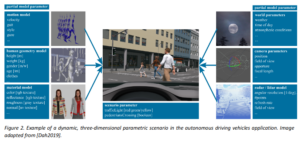

Pedestrian Detection and Intention Prediction in Autonomous Driving Vehicles

The goal of this scenario is the creation and verification of an artificial intelligence system that
will automatically detect pedestrians and predict their intentions, in order to perform emergency
maneuvers that avoid an imminent collision with a pedestrian. The scenario motivates the use of
synthetic training data (project objective 1), as real-world data of pedestrian collisions is
unavailable in sufficient quantity and variety for statistical and ethical reasons. It motivates the
reproducibility aspect of a deployment platform (project objective 2), as the verification of such
AI systems must itself be repeatable. Finally, the scenario motivates the use of optimizing compiler
technology and efficient training (project objective 3), as the large variance in the scenario leads
to enormous amounts of both training and test data, so that the efficiency of computation and
memory management becomes a priority. The Use Case is being developed in a series of BMBF-funded
DFKI research projects (React 2018-2020, Direction 2021-2023, XAINES 2020-2024) as
well as cooperative projects such as KI-Absicherung (part of the large VDA initiative on highly
automated driving, 2019-2022) funded by BMWi and LidarShared (2020-2021) funded by BMVI.
Furthermore, DFKI is part of the Acatech platform Learning Systems, in which relevant
contributions to the use case are put forward as well.

Breast Cancer Detection in Fluorescence Microscope Images

The goal of this scenario is the detection of a specific type of breast cancer cell in fluorescence
microscopy images. Using Deep Learning technology, this technique can identify specific subtypes
of cancer in clinical routine (as opposed to research studies), which allows for medication with
very effective drugs that unfortunately have severe side effects and high cost and therefore cannot
be administered speculatively. The scenario motivates the use of synthetic training data (project
objective 1), as real-world data of the cancer cells is not available in sufficient quantity, the
collection of such data is difficult for legal and cost reasons, and the manual labelling (semantic
segmentation) requires an infeasible effort. It motivates the reproducibility aspect of a
deployment platform (project objective 2), as the FDA approval of the trained systems will require
studies that demonstrate the reliability and reproducibility of the method. The use case is
developed by our partners at Leibniz INM in the projects HEReHERe (funded by
Else Kröner-Fresenius-Stiftung) and MetGaP (funded by Deutsche Krebshilfe). Funding to involve
DFKI in the line of research is currently being requested.

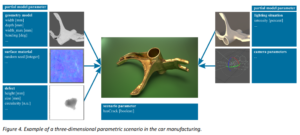

Defect Detection and Classification in Car Manufacturing

The goal of this scenario is the detection of manufacturing defects in a production line, for
example in metal injection molding for gears. Using Deep Learning technology, optical inspection
systems allow the real-time detection of defects at various positions in the production line, but
require specific training for each new product. The scenario thus motivates the use of synthetic
training data (project objective 1), as real-world training data is not available at the time of
training, and data showing defects is not available in sufficient quantity because of low defect
rates. The scenario also motivates understanding how the end-to-end performance of
systems behaves in a real-time setting (project objective 2), as inspection systems need to keep
up with production schedules. The same argument also motivates the use of high-performance
compiler technology and efficient inference (project objective 3). The Use Case originates from
the industry research project SCORTEX-SOW1; similar approaches are followed in the COGNITWIN
EU project (870130).